Deliver AI at scale with secure and supported MLOps

Move from experimentation to production using a modular MLOps platform. Accelerate project delivery and ensure a quick return on investment.

What is MLOps?

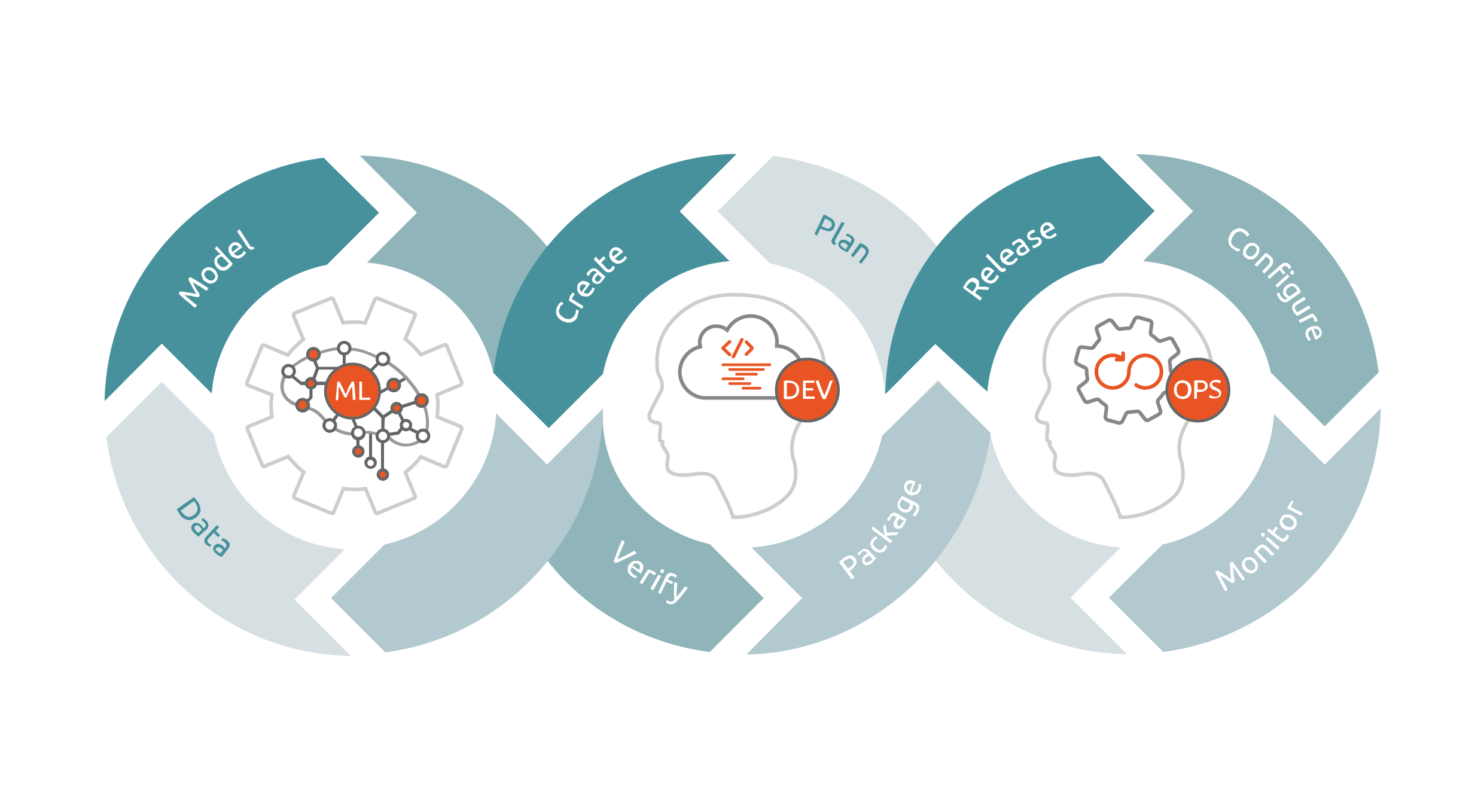

Machine learning operations (MLOps) is like DevOps for machine learning. It is a set of practices for automating machine learning workflows so that they are scalable, portable and reproducible.

Why choose Canonical MLOps?

- 10 years of security maintenance

- Easy deployment and integration of different tools

- Hybrid, multi-cloud experience

- Simple per-node subscription

- Automated lifecycle management

End-to-end MLOps with Charmed Kubeflow

Charmed Kubeflow is the foundation of Canonical MLOps. It integrates with tools including Charmed MLflow, Canonical Observability Stack and Charmed Spark, covering all stages of the machine learning lifecycle.

MLOps services

Full support for your ML stack

Canonical offers a fully supported machine learning operations solution with guaranteed SLAs. Get the same level of support across public and private clouds.

Managed MLOps

We run the platform so your team can focus on developing and deploying models. Streamline operational service delivery and offload the design, implementation and management of your MLOps environment.

Open source MLOps in the public cloud

Get started with machine learning in the public cloud. Use the Charmed Kubeflow appliance on AWS to experiment with MLOps quickly and scale up with Canonical's support.

Get your team up to date with an MLOps workshop

Canonical offers a 5-day, on-site workshop for up to 10 participants. It includes designing an architecture for your specific use case and concludes with a full MLOps architecture proposal.

MLOps resources

One of our customers migrated from a legacy platform to Canonical MLOps and reduced their operational costs.

Learn to take models to production using open source MLOps platforms.

Find out how to streamline operations and scale AI initiatives using open source MLOps platforms on NVIDIA DGX.

Run open source MLOps on AWS to remove compute power constraints and start your AI project quickly.

Choosing a suitable machine learning tool can often be challenging. Understand the differences between the most widely used open source solutions.